Deep Learning Approach To Multiple Ocular Disease Detection From Retinal Images

Minh-Chuong Huynh, Hoa Nguyen, Quang Nguyen

Motivation

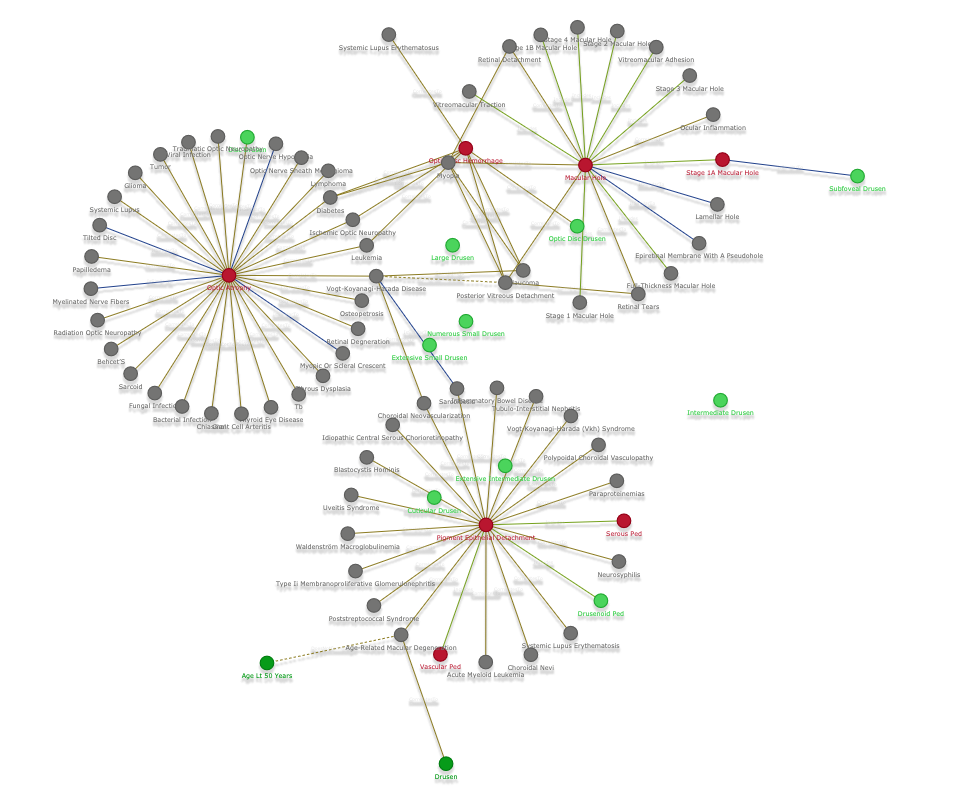

In 2018, approximately 1.3 billion people suffered from vision impairment, 80% of which is considered avoidable (WHO). Early detection and proper treatment can halt disease progression and thereby prevent blindness. Unfortunately, a shortage of skilled ophthalmologists and facilities in developing and under-developed countries prevents many patients from accessing eye care services. An autonomous screening system that detects ocular diseases is a feasible solution to this problem. However, current deep learning models based on color retinal images address only a single disease, typically diabetic retinopathy, while a patient can have several diseases concomitantly. As a result, such screening tools will miss many diseases. Hence, a screening system that takes all possible diseases into consideration is needed.

Objective

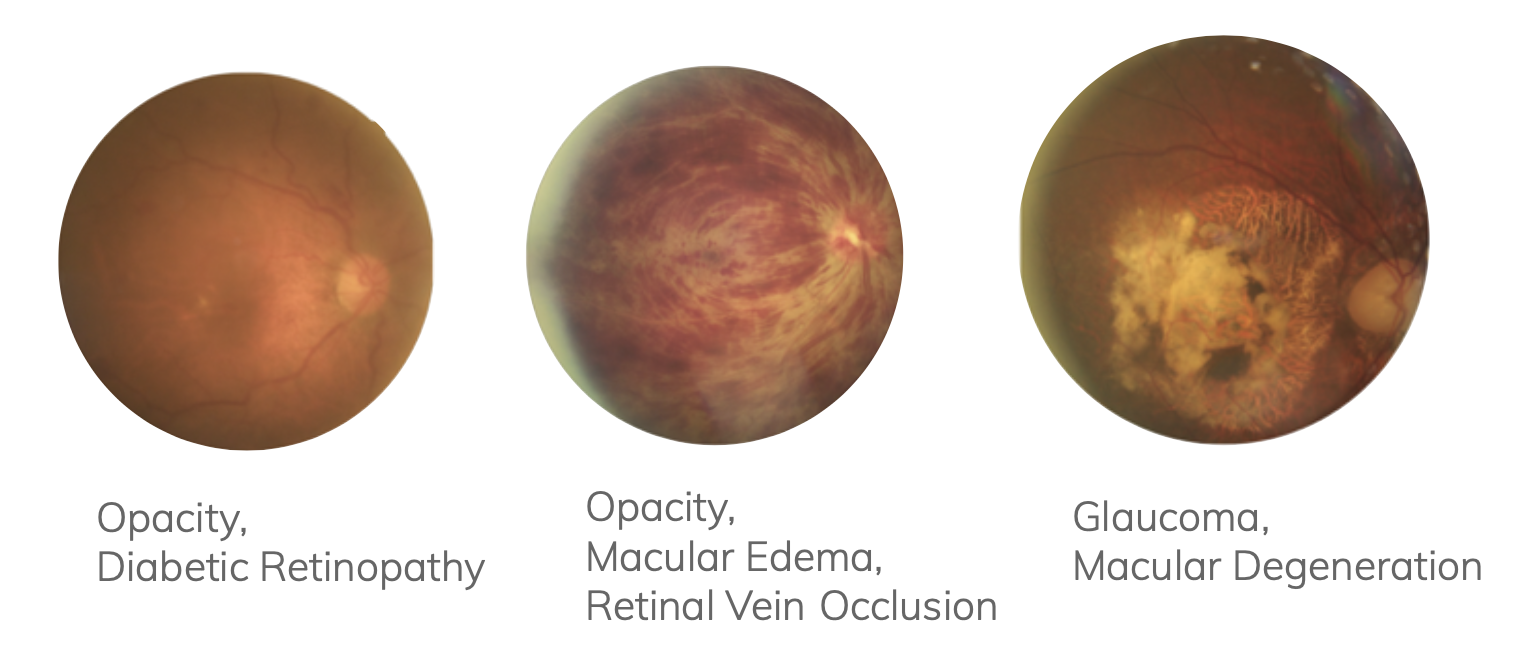

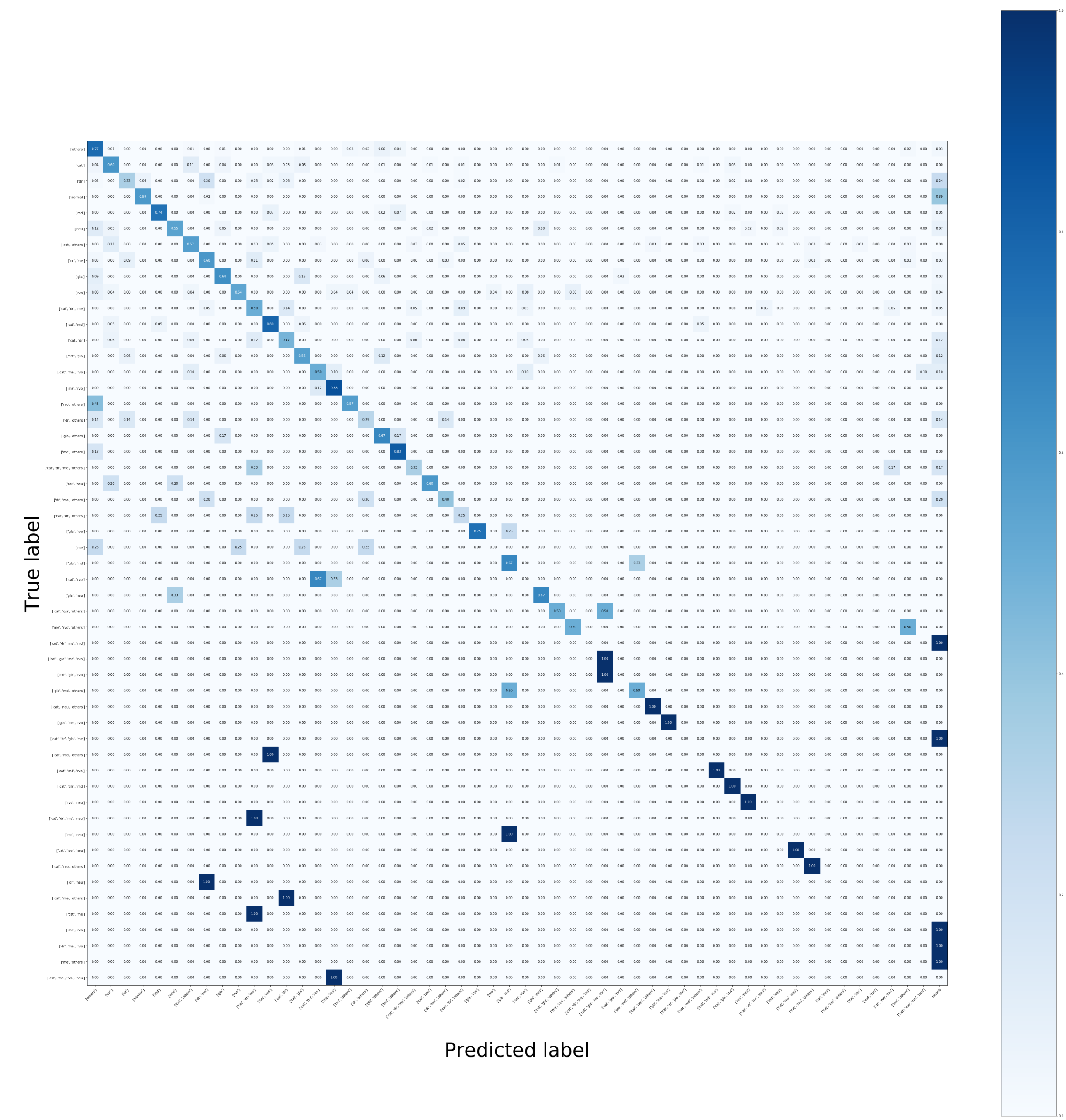

Color fundus images from the database of Cao Thang International Eye Hospital (CTEH) were used to build a multi-label classification model that detects multiple ocular diseases. The model determines whether an image is normal or shows at least one of the following eight common disease categories: cataract, diabetic retinopathy, glaucoma, macular edema, macular degeneration, retinal vascular occlusion, optic neuritis/neuropathy, and others.
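Because an image can carry several diagnoses at once, each image's labels can be represented as a 9-element multi-hot vector (normal plus the eight disease categories). The sketch below shows one way to encode and decode such vectors. The index order in `CLASSES` and the rule that "normal" cannot co-occur with a disease are assumptions for illustration, not details given in the poster.

```python
# Hypothetical class order; the poster lists the nine classes but not their indices.
CLASSES = [
    "normal",
    "cataract",
    "diabetic retinopathy",
    "glaucoma",
    "macular edema",
    "macular degeneration",
    "retinal vascular occlusion",
    "optic neuritis/neuropathy",
    "others",
]


def encode(labels):
    """Turn a set of class names into a 9-element multi-hot vector."""
    labels = set(labels)
    unknown = labels - set(CLASSES)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    # Assumption: a normal eye cannot also be labelled with a disease.
    if "normal" in labels and len(labels) > 1:
        raise ValueError("'normal' cannot be combined with disease labels")
    return [1 if name in labels else 0 for name in CLASSES]


def decode(vector):
    """Inverse of encode: return the class names whose entry is 1."""
    return [name for name, bit in zip(CLASSES, vector) if bit]


y = encode({"cataract", "glaucoma"})
print(y)          # [0, 1, 0, 1, 0, 0, 0, 0, 0]
print(decode(y))  # ['cataract', 'glaucoma']
```

Each of the nine positions is an independent yes/no target, which matches the 9-node output layer described under Model.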

Methodology

Dataset

The whole dataset includes 5,943 disease images from CTEH, plus 515 normal and 585 abnormal images from the Messidor dataset (Decenciere et al., 2014). The split into training, validation, and test sets is shown in the table below.

The normal class comprises images of healthy eyes, while the others class groups the rarer conditions in the CTEH dataset: posterior uveitis, macular pucker, myopia, posterior capsular opacification, eye infections, vitreous/retinal hemorrhage, hereditary retinal dystrophy, retinal detachment/breaks, central serous chorioretinopathy, other disorders on fundus, laser scars, large cup, glaucoma suspect, chorioretinal atrophy, hypertensive retinopathy, and chorioretinal neovascularization.

To evaluate the model in the setting of the Vietnamese healthcare system, the test set contains only images from CTEH.

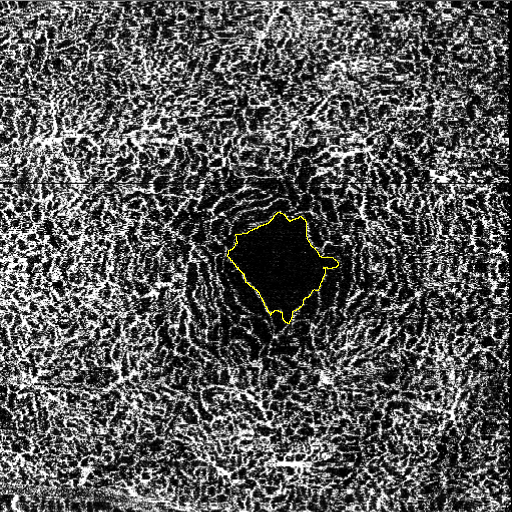

Model

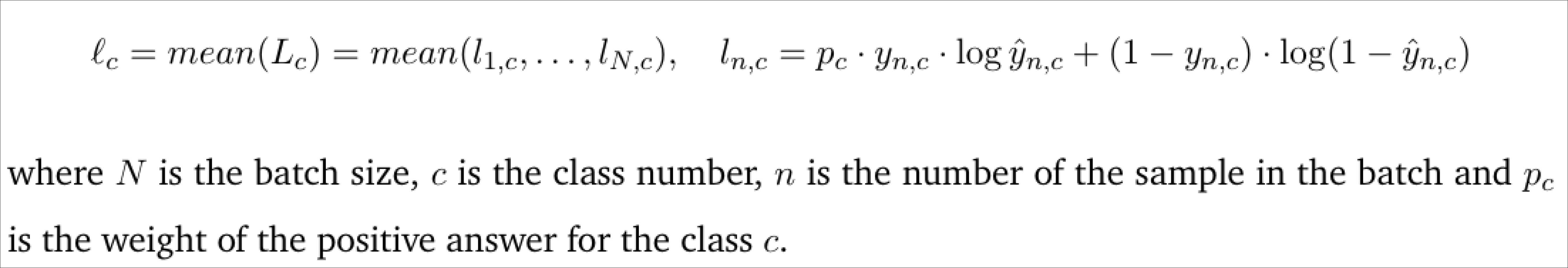

The last layer of EfficientNet-B3 (Tan and Le, 2019) pretrained on ImageNet is replaced with a 9-node fully connected layer. During training, the learning rate starts at 1e-3 and is divided by 10 after 5 epochs without improvement. Real-time data augmentation is applied to prevent overfitting. Moreover, the loss function is modified to address class imbalance in the dataset. To make the predictions easier to interpret, saliency maps are provided to ophthalmologists.
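Two of the training details above, the plateau-based learning-rate schedule and an imbalance-aware loss, can be sketched in plain Python. The poster does not say how the loss was modified, so the class-weighted binary cross-entropy below (positive terms scaled by a per-label weight such as negatives/positives) is an assumed, commonly used choice, and applying it to per-label sigmoid probabilities is likewise an assumption. `ReduceOnPlateau`, `weighted_bce`, and `pos_weights_from_counts` are hypothetical names.

```python
import math


class ReduceOnPlateau:
    """Divide the learning rate by `factor` once the monitored validation loss
    has failed to improve for `patience` consecutive epochs."""

    def __init__(self, lr=1e-3, factor=10.0, patience=5):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = math.inf
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr /= self.factor
                self.bad_epochs = 0  # restart the count after each reduction
        return self.lr


def pos_weights_from_counts(pos_counts, n_samples):
    """Assumed weighting: ratio of negatives to positives for each label."""
    return [(n_samples - c) / c for c in pos_counts]


def weighted_bce(probs, targets, pos_weights, eps=1e-7):
    """Mean binary cross-entropy over the labels of one image, where the
    positive term of label k is scaled by pos_weights[k]."""
    total = 0.0
    for p, t, w in zip(probs, targets, pos_weights):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total += -(w * t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(probs)
```

With a label that is positive in 10 of 100 images, `pos_weights_from_counts([10], 100)` gives a weight of 9.0, so a missed positive costs nine times as much as in the unweighted loss. Feeding `ReduceOnPlateau.step` a sequence of validation losses that stops improving for five epochs lowers the rate from 1e-3 to about 1e-4.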

Results

The model was evaluated with example-based and label-based metrics. Balanced accuracy was calculated for each label to account for the imbalance between positives and negatives.

Example-based Metrics

- Exact match ratio: 0.59

- Accuracy: 0.76

- Recall: 0.89

- Specificity: 0.95

- Precision: 0.79

- F1-score: 0.82
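Example-based metrics score each image's predicted label set against its true label set and then average over images. The poster does not spell out its exact definitions, so the sketch below assumes the standard ones (exact match, Jaccard-style accuracy, and per-example precision, recall, F1, and specificity) and an assumed convention of scoring 0 when a denominator is empty. Labels are represented as sets of class indices over the nine classes.

```python
def _div(a, b):
    # Assumed convention: score 0 when the denominator is empty.
    return a / b if b else 0.0


def example_based_metrics(true_sets, pred_sets, n_labels=9):
    """Average per-image metrics over a list of true and predicted label sets."""
    keys = ["exact_match", "accuracy", "precision", "recall", "specificity", "f1"]
    m = dict.fromkeys(keys, 0.0)
    for Y, Z in zip(true_sets, pred_sets):
        inter = len(Y & Z)
        union = len(Y | Z)
        tn = n_labels - union  # labels absent from both truth and prediction
        m["exact_match"] += Y == Z
        m["accuracy"] += _div(inter, union)
        m["precision"] += _div(inter, len(Z))
        m["recall"] += _div(inter, len(Y))
        m["specificity"] += _div(tn, n_labels - len(Y))
        m["f1"] += _div(2 * inter, len(Y) + len(Z))
    return {k: v / len(true_sets) for k, v in m.items()}


# Image 1: true {1, 2}, predicted {1}; image 2: true {0}, predicted {0}
metrics = example_based_metrics([{1, 2}, {0}], [{1}, {0}])
print(metrics["exact_match"], metrics["recall"])  # 0.5 0.75
```

Exact match is the strictest of these, since one missing or extra label makes the whole image count as wrong; this is consistent with it being the lowest value in the list above.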

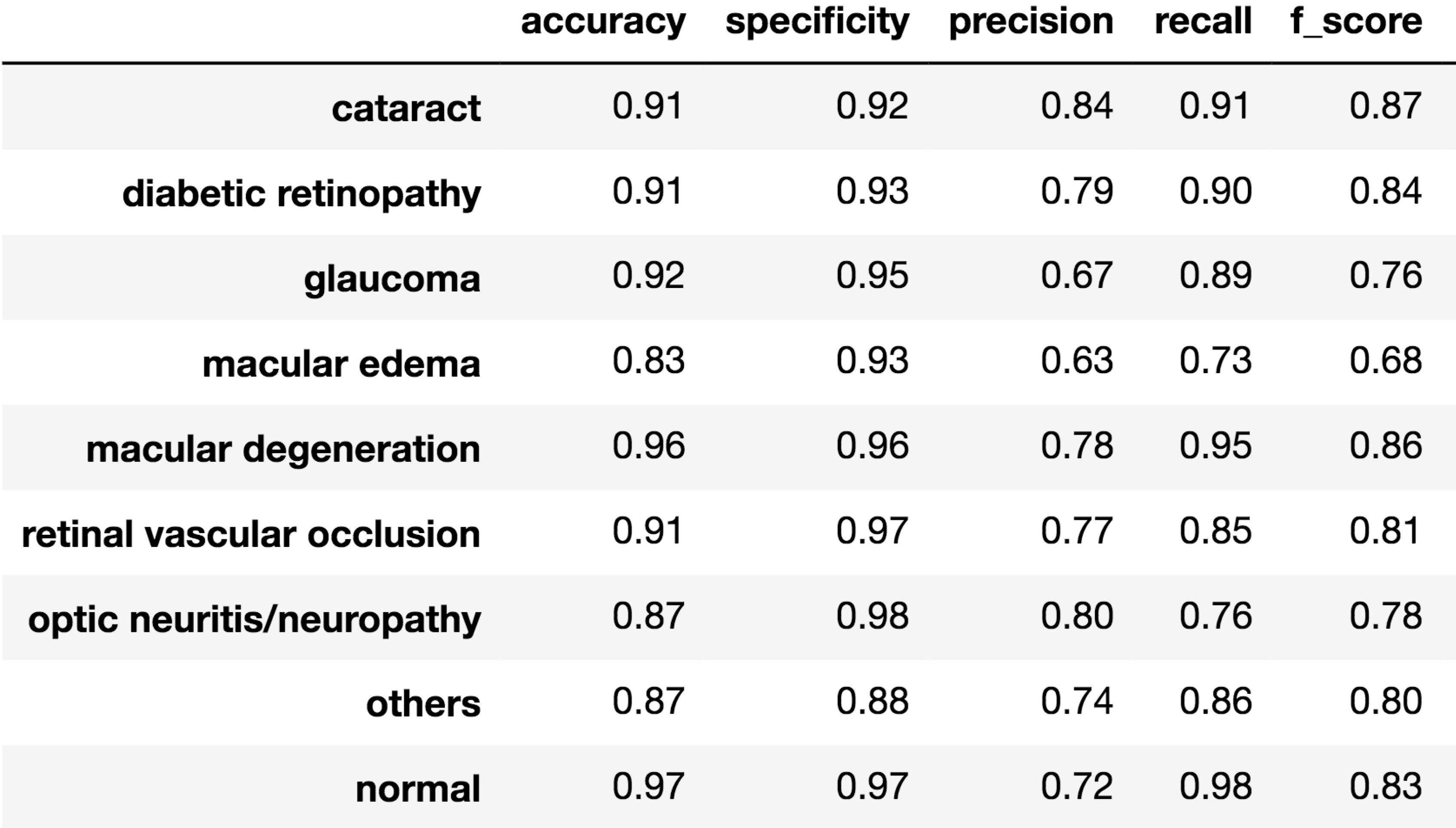

Label-based Metrics

Conclusion

EfficientNet-B3 performs well on this task with only about 6,000 images, achieving an average F1-score of 0.82. Moreover, 6 of the 9 classes have an F1-score above 0.8, and the lowest score is 0.68.

The current model has been integrated into the CTEH system to validate its effect on doctors. In the future, with more data collected, we expect the model to reach an F1-score of 0.9 for all diseases.